AI weather models are bringing a new paradigm to weather forecasting. Throughout our AI series, we have seen that they can compete with numerical weather prediction (NWP) in some cases. However, we have also seen that they are not better in all cases: they can perform worse in extreme conditions and become blurrier at longer lead times.

Recall that AI weather models learn from data. The source of truth for these models is typically a large-scale dataset such as the fifth-generation ECMWF reanalysis, also known as ERA5. Its data is generated by a physical weather model using a process called data assimilation: observations valid for some time T are combined with the model to produce the best possible complete estimate of the atmospheric state at T. AI weather models trained on such large-scale datasets, which often span decades, therefore still depend on physics-based numerical weather models. The question in many research communities these days is: can we reduce this dependency? Is it possible to integrate data assimilation with AI weather models and thus use observations more directly?

In this article, we look at this in more detail by highlighting the state of the art in AI-based data assimilation for AI-based weather models. First, to build the necessary context, we briefly touch on observations and how they are used for weather prediction via data assimilation. Then, we look at two approaches that integrate data assimilation with AI models: the first predicts an NWP-like analysis from observations and a background field, while the second directly predicts how the background field should change given the observations.

How observations are used in weather forecasting

Predicting the weather starts with observations. This has been true for ages: Dutch boerenwijsheden (literally, farmers’ wisdom), for example, link observations to short-term changes in weather conditions that matter for crops. Observations are also critical for making predictions with weather models. Because these models compute the current (also known as initial) state of the atmosphere forward in time, they need to know what state to start from.

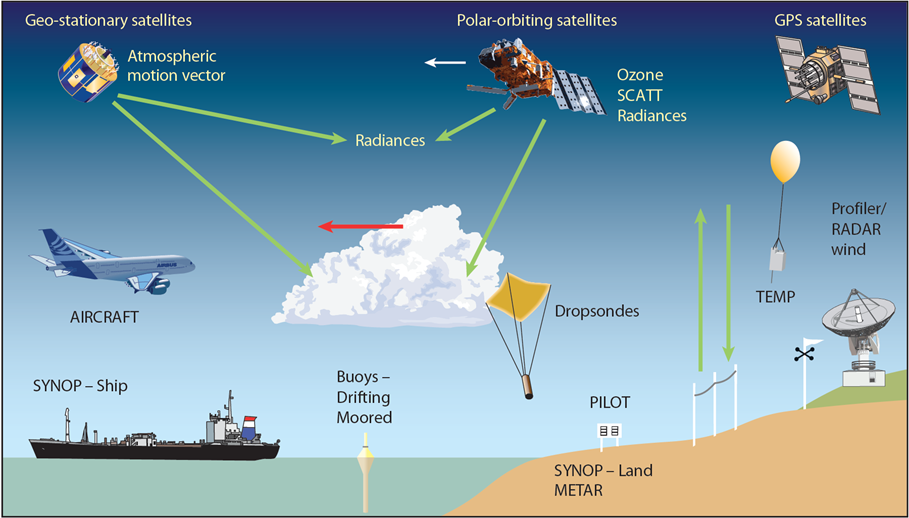

Fortunately, there are many observation sources today. For example, satellites orbiting Earth measure clouds and atmospheric gases across the entire globe. Similarly, weather stations around the world measure surface-level weather, weather balloons capture atmospheric profiles with altitude, and even commercial aircraft play their part (WMO, 2023). Figure 1 illustrates these and other sources of observational data.

Figure 1: A large variety of observation sources exists. Source and copyright: ECMWF, licensed under the Creative Commons Attribution 4.0 International Public License. No changes were made.

Even though the variety and volume of observations are large, they do not capture Earth’s atmosphere entirely. For example, aircraft measure only at the exact position and altitude at which they fly, while polar-orbiting satellites measure in strips (so-called swaths). Geostationary satellites, in turn, have large horizontal coverage, but they (like polar-orbiting satellites) are limited in how well they can measure the various atmospheric layers. In other words, each observation source adds value but comes with its own set of limitations. Still, together these observations carry a strong signal about the global state of the weather, which is very useful for weather prediction because we can assimilate them into a weather model.

Data assimilation

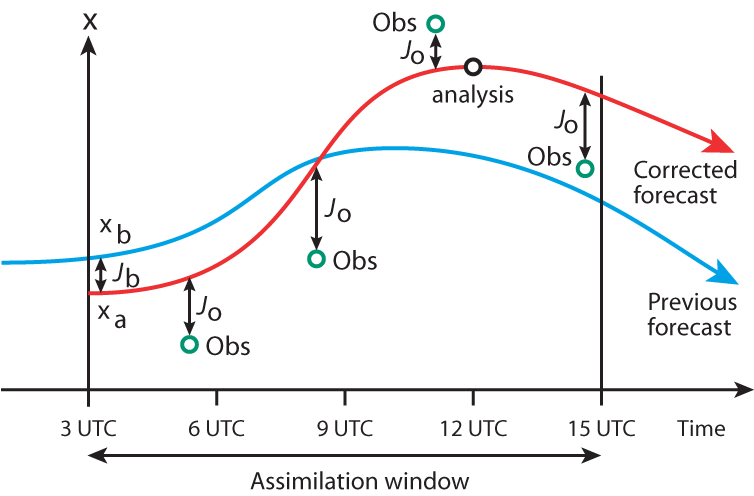

Now that we better understand the variety and volume of observations, it’s time to look at how weather models use them, via a process called data assimilation. Its goal is to start each new forecast run from the best possible estimate of the current atmospheric state, given the observations that are available. However, we’ve just learned that observations do not fully cover the Earth horizontally or vertically, while we need data for the entire Earth. What’s more, the observations must also be brought into the weather model’s framework. How can we generate this state, also known as the initial conditions?

Today, many major numerical weather models use a technique called 4D variational data assimilation (4D-VAR for short). Observations alone are not enough, because they are unevenly distributed, and the state at time T from a previous forecast cannot simply be reused either, because it contains accumulated errors. 4D-VAR therefore combines the best of both, as visualized in figure 2. It starts from a previous forecast (also known as the background field) for some variable x, illustrated in blue. Within a short window in which observations are available (the assimilation window, here 12 hours long), 4D-VAR adjusts the forecast by finding the initial conditions that minimize the sum of two penalty terms:

1. The corrected forecast should look as much like the previous forecast as possible.

2. The corrected forecast should be as close to the observations as possible.
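In the variational data assimilation literature, these two penalties are usually combined into a single cost function. The form below is the standard textbook formulation rather than something read off figure 2, and the notation is introduced here for illustration: $x_0$ is the initial state being sought, $x_b$ the background field, $\mathbf{B}$ and $\mathbf{R}_i$ the background and observation error covariance matrices, $y_i$ the observations at time $t_i$ within the assimilation window, $M_{0 \to i}$ the forecast model that propagates $x_0$ to $t_i$, and $H_i$ the observation operator that maps a model state to what the instrument would measure.

$$
J(x_0) = \underbrace{\tfrac{1}{2}\,(x_0 - x_b)^\top \mathbf{B}^{-1} (x_0 - x_b)}_{\text{penalty 1: stay close to the background}}
+ \underbrace{\tfrac{1}{2} \sum_{i=0}^{N} \big(y_i - H_i(M_{0 \to i}(x_0))\big)^\top \mathbf{R}_i^{-1} \big(y_i - H_i(M_{0 \to i}(x_0))\big)}_{\text{penalty 2: stay close to the observations}}
$$

The covariances $\mathbf{B}$ and $\mathbf{R}_i$ set how much each penalty is trusted: the more uncertain the background, the more the observations are allowed to pull the solution away from it.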

This yields the best possible compromise: the gaps between observations are filled, while the observations steer the initial conditions towards the actual current state of the atmosphere. The resulting state at time T is called the analysis, and the weather model uses it to compute the weather forecast, either via physics (in the case of NWP) or via its learned parameters (in the case of AI-based weather prediction).
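To make this concrete, the sketch below minimizes the two penalties for a single variable in Python. It rests on strong simplifying assumptions and is not how operational 4D-VAR is implemented: the "weather model" is the hypothetical linear rule x[k+1] = a * x[k], the variable is observed directly at a few time steps within the window, and the background and observation error variances (var_b, var_o) are known scalars. All function names are hypothetical.

```python
# Toy, single-variable 4D-VAR (illustration only).
# Assumptions: linear model x[k+1] = a * x[k], direct observations (H = identity),
# scalar error variances var_b (background) and var_o (observations).


def forecast(x0: float, a: float, steps: int) -> list[float]:
    """Run the toy model forward; returns the states at k = 0..steps."""
    states = [x0]
    for _ in range(steps):
        states.append(a * states[-1])
    return states


def cost(x0, xb, obs, a, var_b, var_o):
    """Penalty 1 (distance to background) + penalty 2 (distance to observations).

    obs maps a time step k in the window to the observed value at that step.
    """
    traj = forecast(x0, a, max(obs))
    j_background = 0.5 * (x0 - xb) ** 2 / var_b
    j_obs = sum(0.5 * (y - traj[k]) ** 2 / var_o for k, y in obs.items())
    return j_background + j_obs


def gradient(x0, xb, obs, a, var_b, var_o):
    """dJ/dx0. For this linear model, the 'adjoint' of k model steps is a**k."""
    traj = forecast(x0, a, max(obs))
    g = (x0 - xb) / var_b
    g -= sum(a ** k * (y - traj[k]) / var_o for k, y in obs.items())
    return g


def four_d_var(xb, obs, a, var_b, var_o, lr=0.1, iters=500):
    """Minimize J with plain gradient descent, starting from the background."""
    x0 = xb
    for _ in range(iters):
        x0 -= lr * gradient(x0, xb, obs, a, var_b, var_o)
    return x0
```

For example, `four_d_var(10.0, {0: 12.0, 6: 12.0, 12: 12.0}, a=1.0, var_b=1.0, var_o=1.0)` returns approximately 11.5: between the background and the observations, but closer to the observations because there are three of them. Operational systems minimize the same kind of cost over millions of variables, using an adjoint model to compute the gradient.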

Figure 2: An example of data assimilation. Source and copyright: ECMWF, licensed under the Creative Commons Attribution 4.0 International Public License. No changes were made.

Assimilating observation data into AI weather models

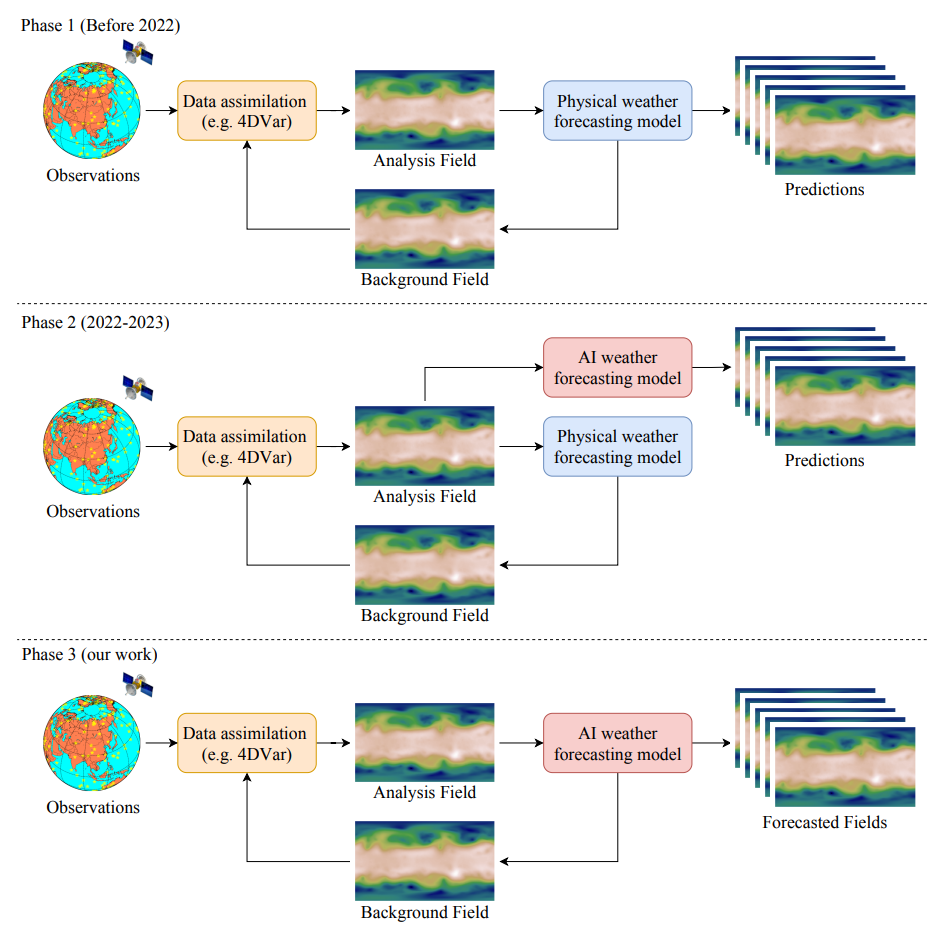

When it comes to assimilating observations into numerical and AI-based weather models, Xiao et al. (2023) suggest that developments in recent years have occurred in multiple phases, as illustrated in figure 3: phase 1 is the traditional NWP setup, phase 2 shows how AI models have benefited from NWP analyses, and in phase 3 data assimilation is integrated end-to-end with the AI-based weather model.

In the first phase, an analysis field is produced with the data assimilation techniques discussed before, and the numerical weather model uses it to make predictions. Then, the forecast valid at the initial time of the next run (the background field!) is combined with new observations to perform data assimilation for that run. The new analysis is used to make a new forecast, and this cycle repeats continuously.
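The phase 1 cycle can be sketched as a simple loop. This is a toy under stated assumptions: the state is a single number, `model_forecast` is a hypothetical stand-in for an NWP model that applies a fixed trend, and `assimilate` replaces 4D-VAR with a simple weighted average of background and observation (the scalar optimal-interpolation update).

```python
# Toy forecast-assimilation cycle (phase 1), for illustration only.
# Assumptions: scalar state, a hypothetical trend-only "model", and a scalar
# optimal-interpolation update standing in for 4D-VAR.


def model_forecast(state: float, hours: int, trend_per_hour: float = 0.1) -> float:
    """Hypothetical stand-in for an NWP model: apply a fixed hourly trend."""
    return state + trend_per_hour * hours


def assimilate(background: float, observation: float, var_b: float, var_o: float) -> float:
    """Blend background and observation; the less certain one gets less weight."""
    gain = var_b / (var_b + var_o)
    return background + gain * (observation - background)


def run_cycle(initial_analysis, observations, cycle_hours=6, var_b=1.0, var_o=1.0):
    """Alternate forecasting and assimilation; returns the analysis of every cycle."""
    analysis = initial_analysis
    analyses = [analysis]
    for obs in observations:
        # The forecast valid at the next initial time is the background field...
        background = model_forecast(analysis, cycle_hours)
        # ...which new observations correct into the next analysis.
        analysis = assimilate(background, obs, var_b, var_o)
        analyses.append(analysis)
    return analyses
```

With `run_cycle(10.0, [11.0, 11.0])`, the analyses are approximately 10.0, 10.8 and 11.2: each forecast drifts upward by the model trend, and each analysis pulls it halfway back towards the observation because the two error variances are equal here.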

In the second phase, numerical weather models are replaced by AI-based weather models. However, these models still rely on large-scale datasets generated through data assimilation with physical weather models, such as ERA5. They use this data for training, which means that, once trained, they only need a new analysis (typically also from an NWP model) to start generating predictions. Currently, none of the first- and second-generation AI weather models assimilate observations directly; they are phase 2 models.

Finally, phase 3 models are fully AI-based weather models, meaning that data assimilation is incorporated into the forecast generation pipeline. This can be done in multiple ways. One way is to train AI models that assimilate certain data sources into NWP-like analyses (which could be seen as phase 2.5, if you will). Another is to train models that produce fully AI-based analyses directly from observations. This could potentially lead to better initial states and thus better forecasts. Let’s now look at a few recent works to better understand what’s possible today.

Figure 3: From NWP-based assimilation to AI-based assimilation. Image from Xiao et al. (2023).

Assimilating data sources using NWP-like analysis data

In our article about second-generation AI models, we introduced the FuXi model, which effectively combines three models that each specialize in a time horizon (one for the short term, one for the medium term and one for the long term). In Xu et al. (2024), a new model called FuXi-DA is trained to assimilate observations from the Advanced Geosynchronous Radiation Imager (AGRI) on board the Fengyun-4B satellite into FuXi-generated background fields. Unpacked, this is what happens:

1. The authors use FuXi to generate a forecast for T+6 hours, which then serves as the background field for the new forecast run starting at T+6 hours.

2. They feed AGRI satellite observations for the period up to T+6 hours, together with the background field, into a data assimilation model. This model is trained with background fields and satellite observations as inputs and ERA5 analyses as targets. Because training minimizes deviations from both the observations and the background field, one could conjecture that it behaves like an implicit form of 4D-VAR.

3. The assimilation model produces the analysis, which thus combines the FuXi output with the satellite observations. FuXi then uses this analysis to generate the forecast run starting at T+6 hours.
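The supervised set-up in step 2 can be illustrated with a deliberately tiny stand-in. FuXi-DA itself uses a deep neural network on full fields; the sketch below only mirrors the training structure, assuming a scalar state that is observed directly and a hypothetical model with a single learnable weight w, so that analysis = background + w * (observation - background). The weight is fitted by least squares to target analyses, which play the role ERA5 plays for FuXi-DA.

```python
# Toy "learned" assimilation trained on (background, observation) -> target pairs.
# Assumptions: scalar, directly observed state; a one-weight model
# analysis = background + w * (observation - background). Names are hypothetical.


def fit_gain(samples):
    """Least-squares fit of w over (background, observation, target) triples.

    Minimizing sum((xb + w * d - t) ** 2) with d = y - xb gives
    w = sum(d * (t - xb)) / sum(d ** 2).
    """
    num = sum((y - xb) * (t - xb) for xb, y, t in samples)
    den = sum((y - xb) ** 2 for xb, y, _ in samples)
    if den == 0:
        raise ValueError("observations never differ from the background; w is undefined")
    return num / den


def learned_analysis(background: float, observation: float, w: float) -> float:
    """Apply the fitted model to a new background/observation pair."""
    return background + w * (observation - background)
```

For instance, training triples in which every target lies a quarter of the way from background to observation, such as (10, 14, 11), (5, 1, 4) and (0, 8, 2), give w = 0.25. The fit can only be as good as its targets, which is why the choice of ERA5 as target matters for the dependency on NWP.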

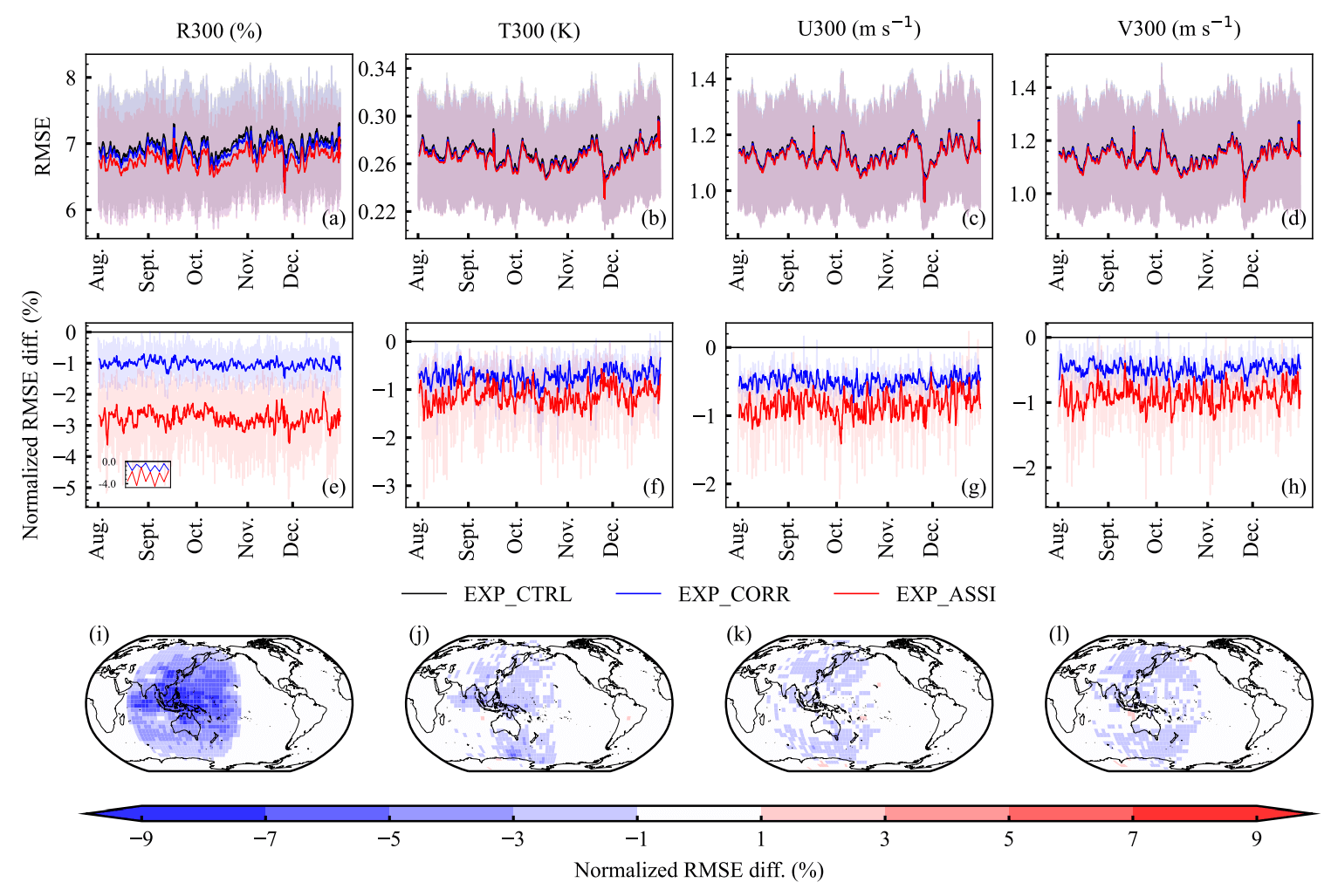

Figure 4: Assimilating AGRI data into the FuXi background field does seem to reduce average errors (Root Mean Squared Error, RMSE), notably in the domain covered by the satellite data. The improvement is not zero-sum: the results suggest that errors elsewhere do not increase, or increase only slightly. Image from Xu et al. (2024).

Other approaches work in similar ways. DiffDA (Huang et al., 2024) uses the GraphCast model, and the approach proposed by Manshausen et al. (2024) attempts to assimilate sparse observation data into the US-based High-Resolution Rapid Refresh (HRRR) model used for short-term weather forecasting. Similarly, FengWu-4DVAR (Xiao et al., 2023) explores assimilating observations directly into analyses, even though ERA5 data is used as the ground truth during training.

Despite the average improvements, these approaches do not entirely remove the dependency on NWP-based analyses. Recall that FuXi is first used to generate a forecast for T+6 hours, and its input for doing so is a recent NWP-based analysis. The same holds for the other approaches: they use either inputs that depend on NWP models (the background fields) or training targets with similar dependencies (typically ERA5 data). Even though this already makes assimilation possible, let’s now dive a bit deeper to see whether the dependency can be removed altogether, that is, whether data can be assimilated directly by the AI-based model.

Producing AI-based analyses directly from observations

In June 2024, the German Weather Service (Deutscher Wetterdienst, DWD) published a paper that claims to make this possible: “[u]nlike previous hybrid approaches, our method integrates the data assimilation process directly into a neural network, utilizing the variational data assimilation framework” (Keller et al., 2024). In simple terms, an AI model assimilates observations into an analysis, “enabling it to perform data assimilation without relying on pre-existing analysis datasets”.

Their approach, named AI-VAR, differs from the other approaches in that only the background field and a set of observations are used. Its output is an analysis increment: how much should the background field change to accommodate the provided observations, so that it represents the current weather as well as possible? Rather than learning to reproduce target analyses, the neural network learns to minimize both the distance between the background field and its output and the distance between the observations and its output. This looks very much like variational data assimilation as done in numerical weather models. What’s more, what happened implicitly in approaches like DiffDA and FuXi-DA is now explicit: the model is trained on the assimilation objective itself rather than on pairs of inputs and target outputs. Using the output is simple: adding the analysis increment to the background field yields the analysis, which can then be used to generate the weather forecast.
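The core idea, training on the variational cost instead of on target analyses, can be illustrated with a deliberately tiny sketch. It is not Keller et al.’s implementation: their model is a neural network operating on full fields, whereas here the state is a single number observed directly, the error variances `var_b` and `var_o` are assumed known, and the hypothetical "network" has one weight `w` that outputs the increment w * (y - xb). The loss is a 3D-Var-style cost with the same two penalties as in 4D-VAR.

```python
# Toy illustration of the AI-VAR idea: learn an increment model by minimizing
# the variational cost itself, without any target analyses.
# Assumptions: scalar, directly observed state (H = identity); known variances;
# a hypothetical one-weight "network" producing the increment w * (y - xb).


def increment(xb: float, y: float, w: float) -> float:
    """Hypothetical model output: the analysis increment."""
    return w * (y - xb)


def variational_loss(w, samples, var_b, var_o):
    """Mean cost of the analyses xa = xb + increment over (xb, y) samples."""
    total = 0.0
    for xb, y in samples:
        xa = xb + increment(xb, y, w)
        total += 0.5 * (xa - xb) ** 2 / var_b + 0.5 * (y - xa) ** 2 / var_o
    return total / len(samples)


def train(samples, var_b, var_o, lr=0.1, iters=1000):
    """Gradient descent on the variational loss; only (xb, y) pairs are used."""
    w = 0.0
    for _ in range(iters):
        grad = 0.0
        for xb, y in samples:
            xa = xb + increment(xb, y, w)
            d_loss_d_xa = (xa - xb) / var_b - (y - xa) / var_o
            grad += d_loss_d_xa * (y - xb)  # chain rule: d xa / d w = y - xb
        w -= lr * grad / len(samples)
    return w
```

With samples `[(10.0, 12.0), (5.0, 4.0), (0.0, 1.0)]`, `var_b = 1` and `var_o = 3`, training converges to w ≈ 0.25, which equals var_b / (var_b + var_o), the classical optimal-interpolation weight. No target analysis was needed: minimizing the variational cost alone recovers a sensible assimilation rule, and adding the learned increment to the background gives the analysis. The learning rate here suits these small samples; other data may need a smaller one.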

Keller et al. (2024) test their approach in multiple ways. First, they use three different cases: a one-dimensional idealized case, a two-dimensional idealized case and a real-world case. The real-world case is especially interesting because it resembles operational assimilation, in this case for a small part of Germany. Second, they compare static and dynamic assimilation: does performance differ between assimilating observations at fixed locations and at varying locations?
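A one-dimensional idealized experiment of this kind could be set up as follows. The details are assumptions for illustration, not Keller et al.’s configuration: the true state is a sine wave on a periodic grid, the background is the truth with a hypothetical random phase (position) error, and observations are noisy samples of the truth at either fixed (static) or freshly drawn (dynamic) grid locations. All names and parameter values are hypothetical.

```python
import math
import random


def sine_field(n_points: int, phase: float) -> list[float]:
    """Hypothetical 1D field: one sine wave on a periodic grid."""
    return [math.sin(2 * math.pi * i / n_points + phase) for i in range(n_points)]


def make_dataset(n_samples, n_points=100, n_obs=10, dynamic=False,
                 phase_error=0.3, noise_std=0.1, seed=0):
    """Return (background, locations, observations, truth) tuples.

    The truth is kept only for evaluation; AI-VAR-style training would use
    just the background and the observations.
    """
    rng = random.Random(seed)
    static_locations = sorted(rng.sample(range(n_points), n_obs))
    samples = []
    for _ in range(n_samples):
        phase = rng.uniform(0.0, 2 * math.pi)
        truth = sine_field(n_points, phase)
        # Background: the truth with a random phase (position) error.
        background = sine_field(n_points, phase + rng.gauss(0.0, phase_error))
        # Static: same locations for every sample; dynamic: new locations per sample.
        if dynamic:
            locations = sorted(rng.sample(range(n_points), n_obs))
        else:
            locations = static_locations
        # Observation operator: sample the truth at the chosen indices, plus noise.
        observations = [truth[i] + rng.gauss(0.0, noise_std) for i in locations]
        samples.append((background, locations, observations, truth))
    return samples
```

Training on `make_dataset(..., dynamic=True)` forces a model to cope with observations that move between samples, which is what the static-versus-dynamic comparison probes.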

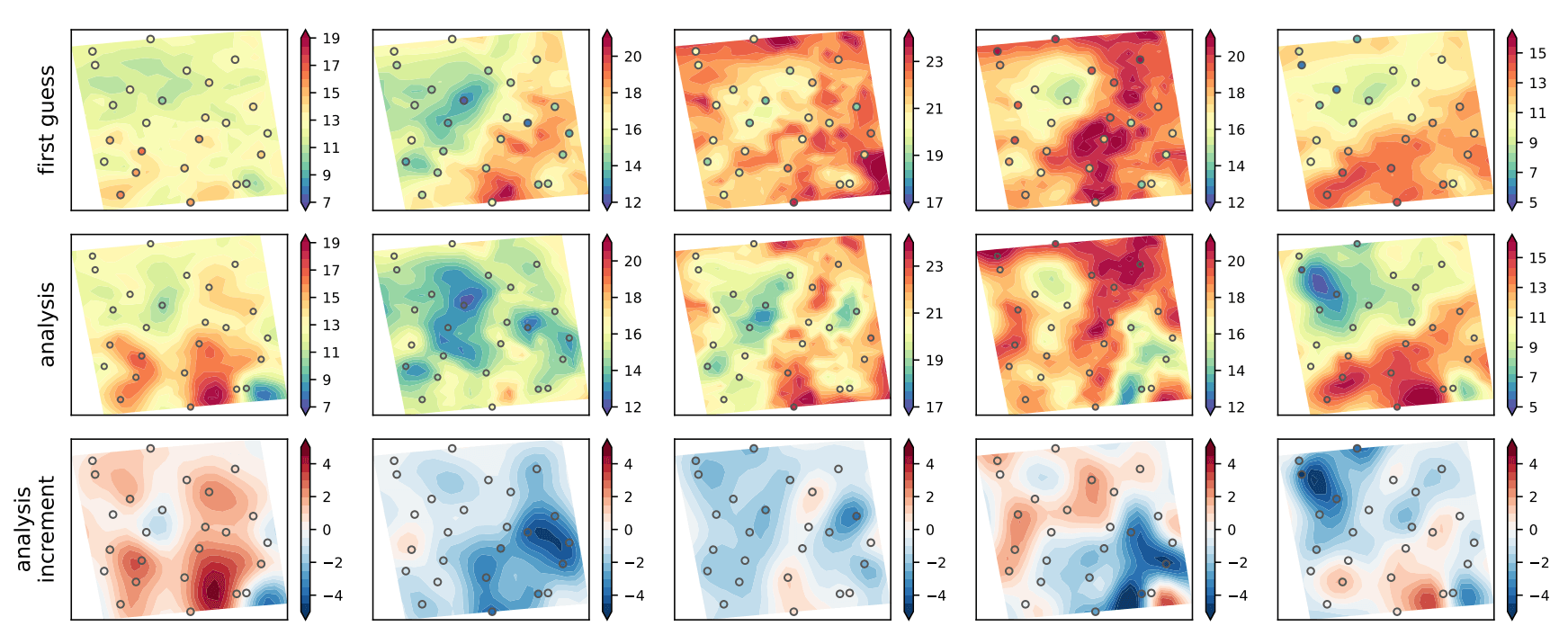

The answer: there is not much difference in performance. More generally, they found that AI-VAR is “capable of effectively reconstructing the original state from observations”, thus generating AI-based analyses directly. Figure 5 shows results from the real-world experiment, in which the mapping that generates analysis increments for two-meter temperature is learned. Each column is an individual weather situation; the first row shows the background field, the middle row the final analysis and the last row the increment. For completeness, we must note that the experimental setup was relatively limited, both in scope (the real-world case covered only a small part of Germany) and in model complexity (a relatively simple model architecture was used). There is thus ample room for future work to improve on these ideas.

Figure 5: Samples from the real-world experiment as learned by AI-VAR (Keller et al., 2024). Using a first guess and observations, the model learns to predict an analysis increment, which can be added to the first guess to obtain the analysis. Image from Keller et al. (2024).

Benefits and limitations of AI-based data assimilation

These relatively recent developments in the field of AI-based weather forecasting bring various benefits, but also limitations. Benefits of AI-based data assimilation include that:

• Observations can be used in weather modelling faster. Recall that speed is one of the strengths of AI-based weather models: their forecasts can sometimes be generated in a matter of minutes. Similarly, learned data assimilation systems could turn observations into analyses within seconds. This helps reduce uncertainty in weather forecasts, especially for extreme weather, as the impact of new observations on the forecast can be computed in near-real-time.

• The AI forecasting system can be tightly coupled and integrated end-to-end. In the cases above, we have seen that AI-based data assimilation can be integrated tightly with an AI-based weather model. For example, FuXi-DA combines FuXi-based forecasts, used as background fields, with satellite observations. Removing the dependency on, for example, background fields from NWP models reduces the dependency on external data sources, as the system becomes integrated end-to-end.

Ultimately, although developments are still in their early days, AI-based data assimilation has the potential to improve AI-based weather forecasts significantly. It also comes with various limitations that future research must investigate:

• Assimilation systems become more dependent on observation availability. While missing observations can simply be dropped in NWP-based data assimilation systems (sometimes with only marginal decreases in performance), this is more difficult in AI-based data assimilation systems, as they typically require inputs in a fixed format. This can be mitigated by setting up the assimilation system flexibly, as demonstrated by, for example, the Keller et al. (2024) system.

• New observation sources may require retraining if models are not set up flexibly. For example, we have seen an AI-based data assimilation system capable of assimilating satellite images into analyses. That model has simply learned to convert background fields and satellite inputs into target analyses. If new types of satellite data become available, whether a new domain, new variables or even a new satellite with a different resolution, the model may need to be retrained, which can be resource-intensive.

• Does direct AI-based data assimilation also work for large domains? We don’t know yet. Keller et al. (2024) used idealized setups or a setup with a very small domain compared to typical weather model domains. Future work should investigate whether the technique remains as promising for larger domains.
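On the first limitation: one common way to give a model fixed-size inputs when the number and position of observations vary is to scatter them onto the model grid together with a mask. The sketch below assumes observations have already been matched to grid indices; it illustrates a general technique and is not necessarily how Keller et al. (2024) make their system flexible. The function names are hypothetical.

```python
def grid_observations(observations, n_points, fill_value=0.0):
    """Scatter (grid_index, value) observations onto a fixed-size grid.

    Returns (values, mask): mask[i] is 1.0 where an observation exists, else 0.0.
    Both lists always have length n_points, however many observations arrive.
    A later observation at the same index overwrites an earlier one.
    """
    values = [fill_value] * n_points
    mask = [0.0] * n_points
    for index, value in observations:
        if not 0 <= index < n_points:
            raise ValueError(f"observation index {index} is outside the grid")
        values[index] = value
        mask[index] = 1.0
    return values, mask


def masked_obs_penalty(analysis, values, mask, var_o):
    """Observation term of a variational loss that ignores unobserved points."""
    return sum(0.5 * m * (v - a) ** 2 / var_o for a, v, m in zip(analysis, values, mask))
```

If a station drops out, its mask entry simply becomes 0 and its term vanishes from the loss, while the input shape the model sees stays the same.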

What’s next

So far, our AI series has focused on the first and second generations of AI weather models, how they can be verified and how observations can be used more directly. However, more articles are under way! In the next few, we will focus on the following topics:

Better capturing extremes with sharper forecasts

One of the downsides of AI-based weather models is increased blurring at longer lead times, as well as difficulty capturing extreme weather events. As with directly assimilating observations into AI-based weather forecasting, new approaches have emerged that attempt to mitigate this problem.

AI-based ensemble weather modelling

The weather is inherently uncertain. The speed with which AI-based weather forecasts can be generated enables scenario forecasting with significantly more scenarios, which helps to better quantify this uncertainty. This is especially valuable in high-risk weather situations, such as when thunderstorms or windstorms threaten your operations.

AI-based limited-area modelling

Today’s AI-based weather models are global. Can we also extend AI-based weather forecasting to regional models with higher resolution? The answer is yes, as first attempts have already emerged in research communities. In that article, we take a closer look at limited-area modelling with AI.

References

Google. (2023). MetNet-3: A state-of-the-art neural weather model available in Google products. Google Research. https://research.google/blog/metnet-3-a-state-of-the-art-neural-weather-model-available-in-google-products/

Huang, L., Gianinazzi, L., Yu, Y., Dueben, P. D., & Hoefler, T. (2024). DiffDA: A diffusion model for weather-scale data assimilation. arXiv preprint arXiv:2401.05932.

Keller, J. D., & Potthast, R. (2024). AI-based data assimilation: Learning the functional of analysis estimation. arXiv preprint arXiv:2406.00390.

Manshausen, P., Cohen, Y., Pathak, J., Pritchard, M., Garg, P., Mardani, M., ... & Brenowitz, N. (2024). Generative Data Assimilation of Sparse Weather Station Observations at Kilometer Scales. arXiv preprint arXiv:2406.16947.

Versloot, C. (2024). AI & weather forecasting: Evaluate AI weather models with WeatherBench. Infoplaza. https://www.infoplaza.com/en/blog/ai-weather-forecasting-evaluate-ai-weather-models-with-weatherbench

Willemse, A., & Versloot, C. (2024a). AI and weather forecasting: NWP vs MLWP models. Infoplaza. https://www.infoplaza.com/en/blog/ai-weather-forecasting-nwp-vs-mlwp-models

Willemse, A., & Versloot, C. (2024b). AI and weather forecasting: The first wave of AI-based weather models. Infoplaza. https://www.infoplaza.com/en/blog/ai-weather-forecasting-first-wave-ai-based-weather-models

Willemse, A., & Versloot, C. (2024c). AI and weather forecasting: Second generation of AI weather models. Infoplaza. https://www.infoplaza.com/en/blog/ai-weather-forecasting-second-generation-ai-weather-models

WMO. (2023, March 16). COVID-19 impacts observing system. World Meteorological Organization. https://wmo.int/news/media-centre/covid-19-impacts-observing-system

Xiao, Y., Bai, L., Xue, W., Chen, K., Han, T., & Ouyang, W. (2023). FengWu-4DVar: Coupling the data-driven weather forecasting model with 4D variational assimilation. arXiv preprint arXiv:2312.12455.

Xu, X., Sun, X., Han, W., Zhong, X., Chen, L., & Li, H. (2024). FuXi-DA: A generalized deep learning data assimilation framework for assimilating satellite observations. arXiv preprint arXiv:2404.08522.